
The Aerial Perspective Blog

Mapware’s Photogrammetry Pipeline, Part 3 of 6: Structure from Motion

The first two steps in Mapware’s photogrammetry pipeline dealt with 2D images of a landscape. By the third step, structure from motion (SfM), Mapware finally begins to see that landscape in 3D.

Purpose of structure from motion

Until this point, Mapware’s photogrammetry pipeline has worked exclusively with 2D images. During the keypoint extraction step, Mapware identified distinctive features visible in each image. Then, during the homography step, Mapware grouped those images into overlapping pairs that show the same features. It also calculated the linear transformation (the mathematical differences in scale and orientation) between the images in each pair.

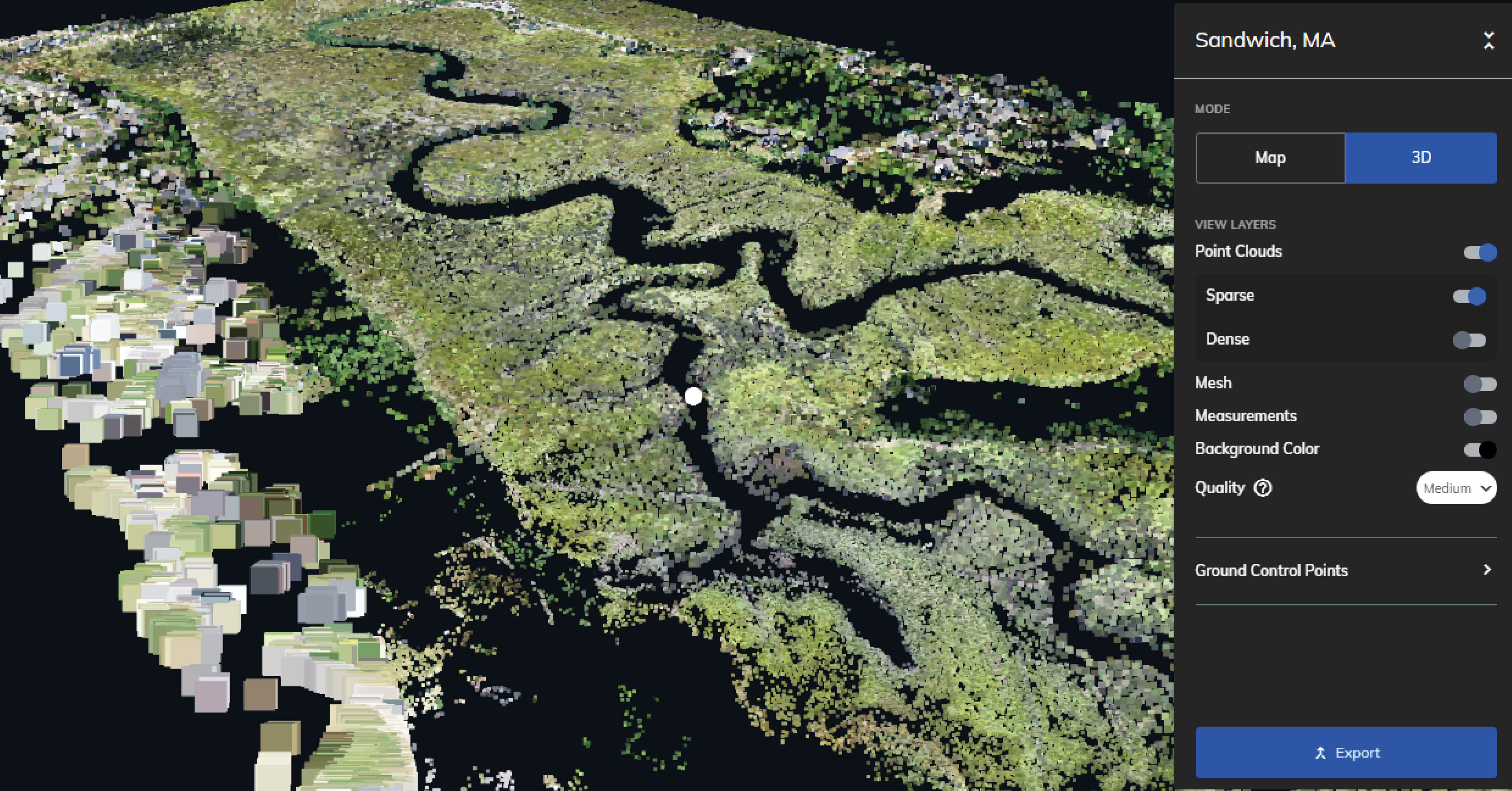

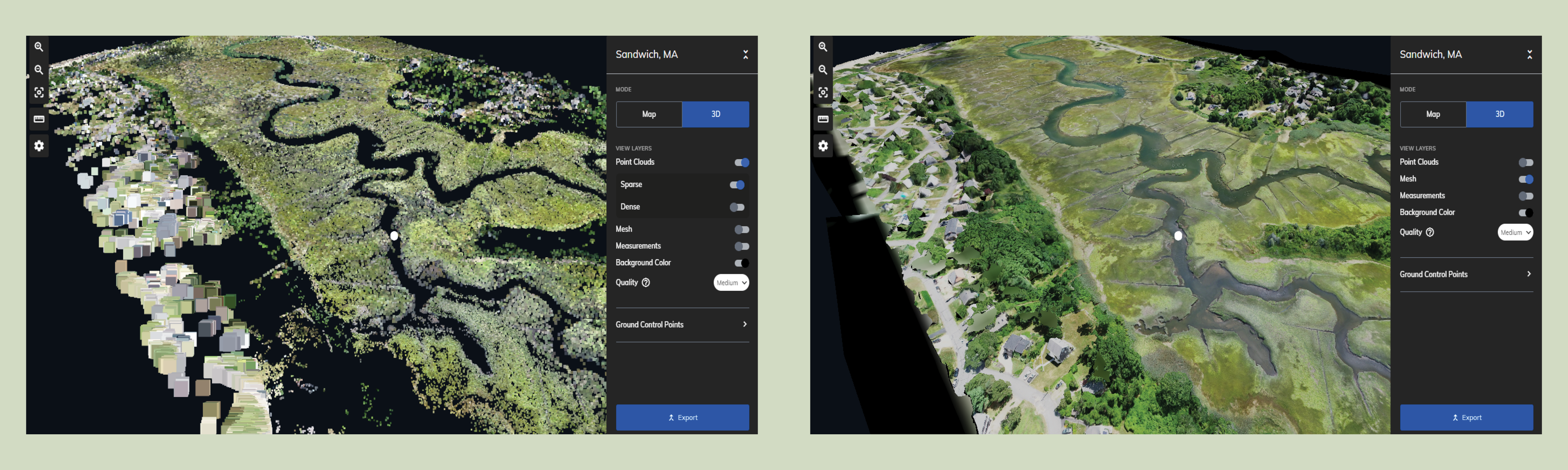

As a photogrammetry tool, Mapware must eventually make the leap from 2D to 3D. This starts to happen in the third step of its pipeline, called structure from motion (SfM). In this step, Mapware combines all the image pairs it identified during homography into a low-resolution, sparse 3D representation of the landscape.

What does “structure from motion” mean?

The term “structure from motion” alludes to motion parallax, one of the ways humans perceive the world in 3D. As we move through space, our eyes see the same objects from different vantage points and our brains use that info to construct a mental 3D map of our environment.
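In its simplest form, motion parallax turns into distance with one textbook formula. The sketch below assumes an idealized pair of photos: the same camera, the same orientation, and a sideways move of known length B (the baseline). A point's distance Z then follows from how many pixels it shifts between the two images (its disparity d): Z = f * B / d, where f is the focal length in pixels. This is the classic rectified-stereo relation, not Mapware's implementation; real SfM must also work out where the cameras were, which is what the rest of this step does.

```python
def depth_from_parallax(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance (m) to a point seen from two camera positions baseline_m apart.

    Assumes an idealized, rectified image pair: same focal length and
    orientation, camera moved parallel to the image plane, so the point
    shifts by disparity_px pixels between the two photos.
    """
    if disparity_px <= 0:
        # Zero shift would mean the point is infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Illustrative numbers: a camera with a 3000 px focal length moves 20 m.
print(depth_from_parallax(3000, 20, 600))  # 100.0 (a feature 100 m away)
print(depth_from_parallax(3000, 20, 750))  # 80.0 (a closer feature shifts more)
```

Nearby objects shift more than distant ones, which is exactly the cue our eyes and brains exploit.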

In aerial photogrammetry, drones serve as a computer’s eyes—“walking” the computer through a landscape by taking overlapping photographs from above. And in the structure from motion step, Mapware serves as the computer’s brain by merging those images into a 3D model.

How Mapware creates sparse 3D models

To turn 2D photos into a sparse 3D model, Mapware must take the following steps:

Calculating linear transformation

Remember that, as drones fly over a landscape, they photograph the same feature multiple times from different distances and angles. Mapware addressed these scale/orientation differences differently in the first two steps of the photogrammetry pipeline.

In the keypoint extraction step, Mapware’s algorithms had to ignore any differences in scale and orientation. They had to recognize, for instance, that a feature in an image taken at close range was the same feature that appeared in another image taken from farther away. This way, they could assign that feature nearly identical keypoints in both images.

In the homography step, Mapware took a different approach and calculated these differences explicitly. As it identified image pairs with similar keypoints, it determined how the features in one image would have to be rotated and resized to match the features in the other image. The result of this calculation is called the linear transformation, and Mapware stores it as a set of homography matrices.
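A homography is a 3x3 matrix H that maps a pixel (x, y) in one image to (u/w, v/w) in the other, where (u, v, w) = H · (x, y, 1). The sketch below builds the special case described above, a rotation plus uniform scaling (with an optional shift), and applies it to a point. A general homography also carries perspective terms in its bottom row. The helper names are illustrative, not Mapware's code.

```python
from __future__ import annotations

import math


def similarity_homography(scale: float, angle_deg: float,
                          tx: float = 0.0, ty: float = 0.0) -> list[list[float]]:
    """3x3 homography that rotates by angle_deg, scales uniformly, then shifts."""
    a = math.radians(angle_deg)
    c, s = scale * math.cos(a), scale * math.sin(a)
    return [[c, -s, tx],
            [s, c, ty],
            [0.0, 0.0, 1.0]]  # no perspective terms in this special case


def apply_homography(H: list[list[float]], x: float, y: float) -> tuple[float, float]:
    """Map pixel (x, y) through H using homogeneous coordinates."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


# A feature at (1, 0) in one image appears twice as large and rotated 90 degrees in the other.
H = similarity_homography(2.0, 90.0)
print([round(v, 6) for v in apply_homography(H, 1.0, 0.0)])  # [0.0, 2.0]
```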

The structure from motion step reuses this data. As Mapware adds each image pair to its 3D model, it uses the above homography matrices to undo differences in scale and orientation between the images. This ensures that the resulting 3D model will have a consistent scale.
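Undoing a transformation amounts to applying the inverse matrix. A minimal sketch, assuming the homography for a pair is available as a 3x3 nested list (the example values are made up): inverting H and applying it maps a point from the second image back into the first image's scale and orientation.

```python
from __future__ import annotations


def apply_homography(H: list[list[float]], x: float, y: float) -> tuple[float, float]:
    """Map pixel (x, y) through H using homogeneous coordinates."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def invert_3x3(m: list[list[float]]) -> list[list[float]]:
    """Inverse of a 3x3 matrix via the adjugate (transposed cofactors) over the determinant."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    c11, c12, c13 = e * i - f * h, -(d * i - f * g), d * h - e * g
    det = a * c11 + b * c12 + c * c13
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular; the transformation cannot be undone")
    adj = [[c11, -(b * i - c * h), b * f - c * e],
           [c12, a * i - c * g, -(a * f - c * d)],
           [c13, -(a * h - b * g), a * e - b * d]]
    return [[value / det for value in row] for row in adj]


# Hypothetical homography relating image B to image A (includes small perspective terms).
H = [[1.2, 0.1, 5.0],
     [-0.05, 0.9, -3.0],
     [0.001, 0.0005, 1.0]]
H_inv = invert_3x3(H)

xb, yb = apply_homography(H, 100.0, 50.0)   # move a point into the other image's frame
xa, ya = apply_homography(H_inv, xb, yb)    # ...and undo the transformation
print(round(xa, 6), round(ya, 6))  # 100.0 50.0
```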

Accessing camera metadata

In addition to calculating linear transformations between images, Mapware accesses metadata within each image to determine the camera’s focal length and to figure out its direction and distance from the subject. With this data, Mapware can accurately position the photos in 3D space.
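Photo metadata typically records the lens focal length in millimetres (the EXIF FocalLength tag), while 3D geometry needs it in pixels. A common conversion uses the sensor width: f_px = f_mm * image_width_px / sensor_width_mm. The sketch below applies that conversion and then projects a point with the standard pinhole camera model. The camera values are illustrative (roughly a 1-inch-sensor drone camera), and the function names are hypothetical rather than Mapware's.

```python
def focal_length_px(focal_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a focal length in millimetres to pixels using the sensor width."""
    return focal_mm * image_width_px / sensor_width_mm


def project_pinhole(point_cam, f_px: float, cx: float, cy: float):
    """Project a point given in camera coordinates (metres) to pixel coordinates."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return f_px * X / Z + cx, f_px * Y / Z + cy


# Illustrative camera: 8.8 mm lens, 13.2 mm wide sensor, 5472 x 3648 px images.
f_px = focal_length_px(8.8, 13.2, 5472)
print(round(f_px, 3))  # 3648.0

# A point 10 m off the optical axis and 100 m from the camera;
# the principal point (cx, cy) is assumed to be the image centre.
u, v = project_pinhole((10.0, 0.0, 100.0), f_px, cx=5472 / 2, cy=3648 / 2)
print(round(u, 1), round(v, 1))  # 3100.8 1824.0
```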

Performing bundle adjustments

Even with the above homography calculations and camera data, Mapware is still only estimating where the drone cameras were in 3D space. Any inaccuracy in those estimates shows up as reprojection error: the distance, in pixels, between where a keypoint actually appears in a photo and where the estimated camera position and 3D point predict it should appear. Left uncorrected, this error can seriously corrupt a model. To minimize it, Mapware performs bundle adjustments at several intervals during the process.
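Reprojection error can be computed for a single observation in a few lines. This sketch assumes a pinhole camera whose estimated pose is a rotation matrix R and translation t (world to camera), with made-up numbers; it is an illustration of the measurement, not Mapware's code.

```python
import math


def project(point_world, R, t, f_px, cx, cy):
    """Pinhole projection of a world point into pixel coordinates."""
    # World -> camera coordinates: X_cam = R * X_world + t
    Xc = [sum(R[i][j] * point_world[j] for j in range(3)) + t[i] for i in range(3)]
    return f_px * Xc[0] / Xc[2] + cx, f_px * Xc[1] / Xc[2] + cy


def reprojection_error(observed_uv, point_world, R, t, f_px, cx, cy):
    """Pixel distance between where a keypoint was seen and where the estimates predict it."""
    u, v = project(point_world, R, t, f_px, cx, cy)
    return math.hypot(u - observed_uv[0], v - observed_uv[1])


R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # estimated camera rotation
t = [0.0, 0.0, 100.0]                                     # estimated camera translation
err = reprojection_error((603.0, 496.0), (10.0, 0.0, 0.0), R, t,
                         f_px=1000.0, cx=500.0, cy=500.0)
print(err)  # 5.0
```

The estimates predict the keypoint at (600, 500), but it was observed at (603, 496), so the error is 5 pixels. A reconstruction usually reports the average (or root-mean-square) of this value over all observations.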

After each image is added to the model, Mapware revisits the existing images and adjusts their 3D positions based on new information gained from the latest addition. These adjustments result in the best possible consensus between all images in the model. In other words, the entire model gets more accurate when it accepts new data.
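Bundle adjustment is a least-squares problem: nudge the estimates until the total squared reprojection error is as small as possible. The sketch below is a drastically reduced toy, assuming a camera with no rotation and its principal point at 0, where only the camera's x-position is refined with Gauss-Newton while the 3D points stay fixed. Real bundle adjustment jointly refines every camera pose and 3D point (and often the camera intrinsics), typically with a sparse Levenberg-Marquardt solver.

```python
def refine_camera_x(points, observed_u, f_px, tx_initial, iterations=5):
    """Toy adjustment: refine only the camera's x-offset tx.

    Assumed camera model for illustration: u = f_px * (X - tx) / Z.
    """
    tx = tx_initial
    for _ in range(iterations):
        # Residuals: predicted minus observed pixel coordinate for each point.
        residuals = [f_px * (X - tx) / Z - u for (X, _, Z), u in zip(points, observed_u)]
        # Derivative of each residual with respect to tx.
        jacobian = [-f_px / Z for (_, _, Z) in points]
        # One-parameter Gauss-Newton step: delta = -(J^T r) / (J^T J)
        jtr = sum(j * r for j, r in zip(jacobian, residuals))
        jtj = sum(j * j for j in jacobian)
        tx -= jtr / jtj
    return tx


points = [(5.0, 2.0, 50.0), (-3.0, 1.0, 40.0), (8.0, -4.0, 60.0)]  # fixed 3D points
f_px = 1000.0
true_tx = 1.0
observed_u = [f_px * (X - true_tx) / Z for X, _, Z in points]  # noise-free synthetic data

tx = refine_camera_x(points, observed_u, f_px, tx_initial=0.8)
print(round(tx, 6))  # 1.0
```

Starting from a wrong guess of 0.8, the adjustment recovers the position that makes every predicted keypoint land on its observation.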

Mapware’s SfM process

Here’s a basic look at the process from start to finish:

- Mapware selects a pair of images to serve as the basis of its model. It uses the homography matrices calculated earlier to try to pose the images together in the same scale/orientation, and then projects their keypoints into 3D space.

- Mapware sorts the remaining image pairs by priority and attempts to add them sequentially to the model. Higher priority is given to images with keypoints that are spread out across their surface, and to images with keypoints that match existing points in the model.**

- Mapware performs a cleanup step to remove bad data. This could mean images that failed several attempts at insertion to the model, pairs that were mistakenly matched during homography, points in the model that only occur in one image, or other outliers that could degrade the quality of the 3D model.

** NOTE: As mentioned above, Mapware performs bundle adjustments at several intervals during model reconstruction, including after each image is added and again after the final image has been added.
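The three stages above can be sketched as a loop. Everything here is an assumption for illustration: the `Candidate` record, the grid-based "spread" score, multiplying spread by the number of matches to rank images, and the `try_register` callback, which stands in for pose estimation plus bundle adjustment. It shows the ordering, retry-and-drop, and single-view-point cleanup logic, not Mapware's actual heuristics.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Candidate:
    """Hypothetical record for an image waiting to be added to the model."""
    name: str
    keypoints: list[tuple[float, float]]  # keypoint pixel coordinates
    matches_to_model: int                 # keypoints matching existing 3D points
    width: int
    height: int


def spread_score(c: Candidate, grid: int = 4) -> float:
    """Fraction of grid cells that contain at least one keypoint."""
    cells = {
        (min(int(x * grid / c.width), grid - 1), min(int(y * grid / c.height), grid - 1))
        for x, y in c.keypoints
    }
    return len(cells) / (grid * grid)


def priority(c: Candidate) -> float:
    # Assumed heuristic: well-spread keypoints AND many matches rank highest.
    return spread_score(c) * c.matches_to_model


def add_images(candidates: list[Candidate], try_register: Callable[[Candidate], bool],
               max_attempts: int = 3) -> tuple[list[str], list[str]]:
    """Add images in priority order; retry failures, then give up on them."""
    queue = sorted(candidates, key=priority, reverse=True)
    attempts = {c.name: 0 for c in candidates}
    registered, failed = [], []
    while queue:
        c = queue.pop(0)
        if try_register(c):
            registered.append(c.name)   # bundle adjustment would run here
        else:
            attempts[c.name] += 1
            if attempts[c.name] < max_attempts:
                queue.append(c)         # retry later
            else:
                failed.append(c.name)   # cleanup: drop this image
    return registered, failed


def cleanup_tracks(tracks: dict[int, set[str]], min_views: int = 2) -> dict[int, set[str]]:
    """Drop 3D points observed in fewer than min_views images."""
    return {pid: views for pid, views in tracks.items() if len(views) >= min_views}


clustered = Candidate("A", [(10, 10), (20, 30), (50, 60), (90, 90)], 30, 400, 400)
spread = Candidate("B", [(10, 10), (150, 150), (250, 250), (390, 390)], 30, 400, 400)
print([c.name for c in sorted([clustered, spread], key=priority, reverse=True)])  # ['B', 'A']
print(add_images([clustered, spread], try_register=lambda c: c.name != "A"))  # (['B'], ['A'])
print(sorted(cleanup_tracks({1: {"img1", "img2"}, 2: {"img1"}, 3: {"img2", "img3"}})))  # [1, 3]
```

A fuller version would re-score the remaining candidates after each successful registration, since every new image adds 3D points that other images can match.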

What comes next?

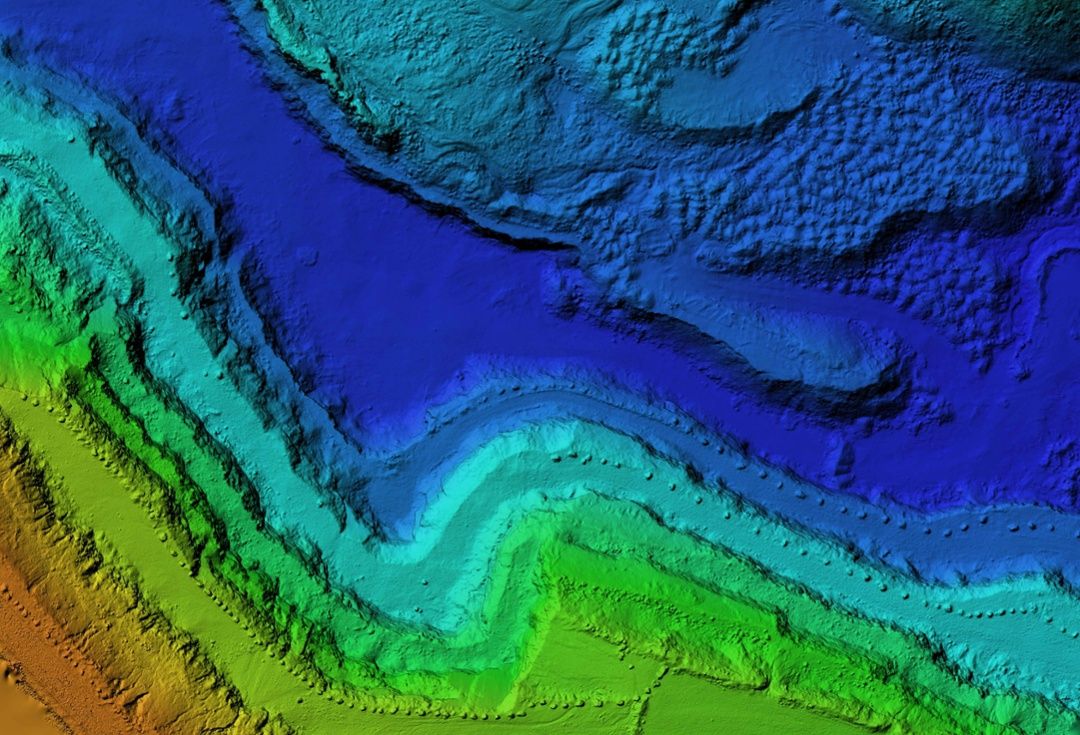

At this point, Mapware has a low-resolution, sparse 3D representation of the environment captured in the drone photos. This is referred to as a sparse point cloud, and it gives the first indication of whether the drone image set will produce a high-quality 3D digital twin at the end of the pipeline.

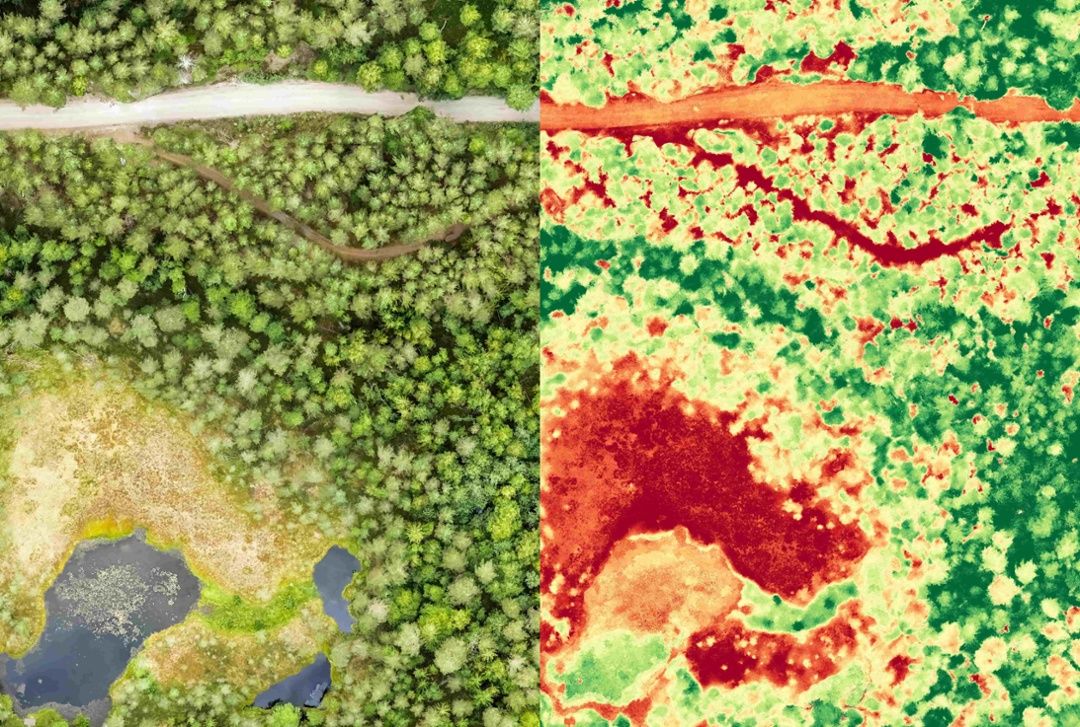

Mapware will then improve the accuracy of its sparse 3D model by creating depth maps, which record the estimated distance from the camera to each point visible in each image. We will discuss the depth mapping step in the next post in this series.
