Mapware’s Photogrammetry Pipeline, Part 4 of 6: Depth Mapping

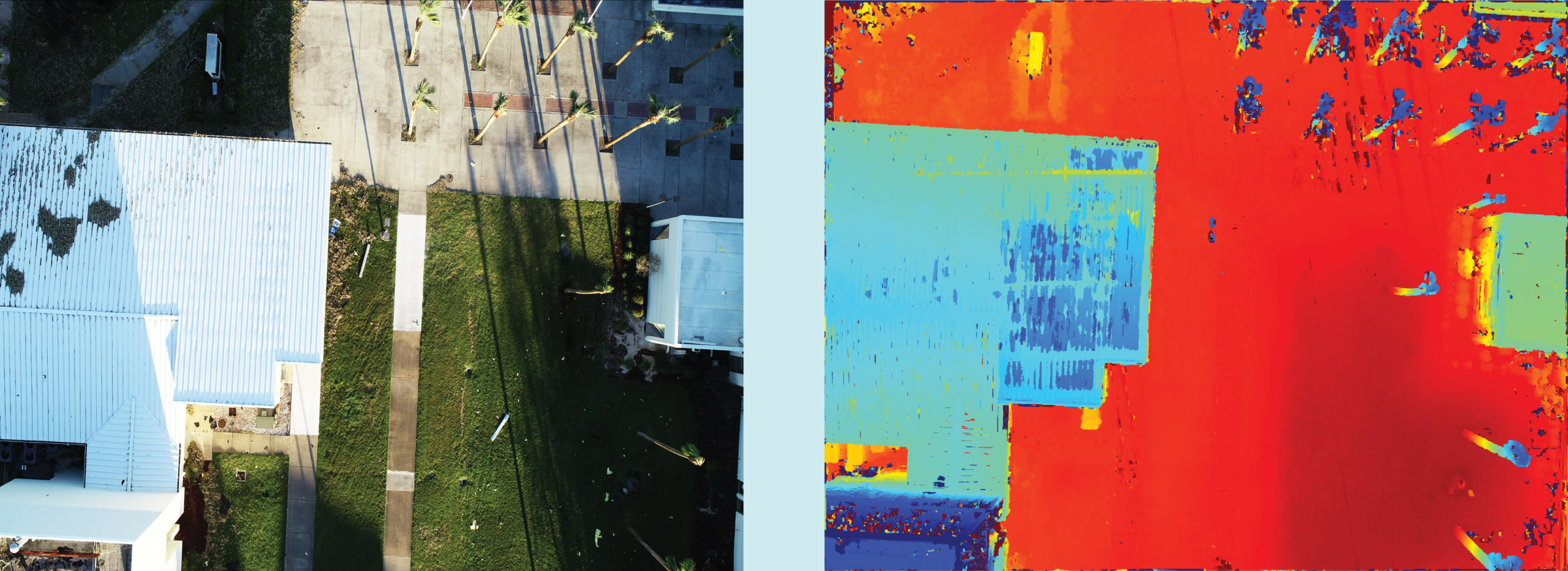

In the next step of the photogrammetry pipeline, Mapware improves upon the accuracy of its 3D point cloud by creating depth maps for each image showing the distance of each pixel from the camera.

Photogrammetry pipeline so far

Here’s a quick recap. In the keypoint extraction step, Mapware identified points of interest called keypoints in each image. In the homography step, Mapware collected pairs of images that overlap on the same keypoints. In the structure from motion (SfM) step, Mapware combined overlapping image pairs with metadata from each image file to estimate the spatial position of the camera. It then generated a low-resolution, sparse 3D point cloud.

The depth mapping step

A sparse point cloud is essentially a constellation of keypoints suspended in 3D space. While it conveys the overall shape of the landscape, it also has large gaps where no keypoints exist. Mapware still needs more data before it can generate a lifelike digital twin.

In the depth mapping step, Mapware uses its sparse point cloud to find the distance from the camera to each image pixel at the moment the image was captured. The result is a series of depth maps, and these will help Mapware fill in the spaces between keypoints to improve the accuracy of its 3D model.

What is a depth map?

Depth maps are 2D images that visually convey the distance of each pixel from the camera, usually through variations in light or color. For this reason, they can be thought of as “2.5-dimensional” images.

How are depth maps created?

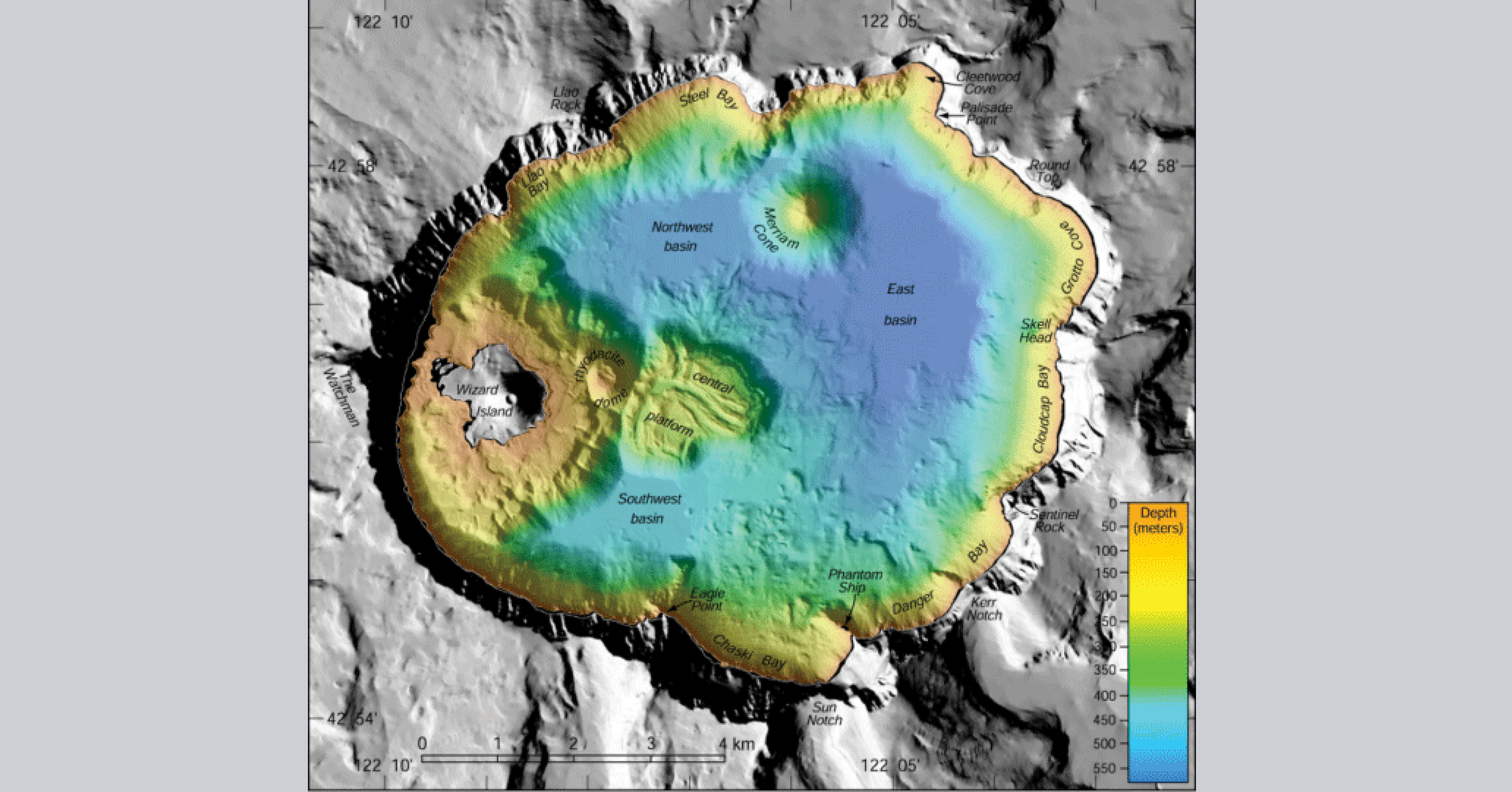

There are many ways to construct depth maps. Sonar equipment can directly emit sound waves toward a surface and reflect the time it takes for them to return. LiDAR equipment does something similar with light waves. Photogrammetry engines like Mapware use an indirect method, running algorithms on 2D images and 3D sparse point clouds to estimate depth.

Mapware’s depth mapping process

Assigning depth values to each pixel in an image is not easy, because each image is just one piece of a larger data set. Remember that even modest-sized 3D models are constructed from hundreds of images. And the drone camera takes each image from a different perspective. So, using the internal geometry of a single image may not convey the realistic depth of the landscape from all angles. Also, some features may be visible in one image and obstructed in the next. For these reasons, Mapware relies on multi-view stereo techniques, specifically pixel matching.

Multi-view stereo (MVS)

The basic idea of multi-view stereo is to see each point from as many different perspectives as possible to determine the best estimate of the location of that point. When Mapware selects an image from which to create a depth map, it also designates a group of adjacent images to act as references and inform its depth calculations.

Pixel matching

To identify reference images for each depth map, Mapware relies on pixel matching. It uses the camera perspectives identified during the SfM step to match pixels in 3D space between two images paired up during the homography step.

Once Mapware has identified enough reference images through pixel matching, it creates a reasonably accurate depth map of the source image. Mapware then repeats this pixel matching process to generate depth maps from the rest of the images.

Next steps

Once the depth mapping process is complete, Mapware combines all its depth maps into a final, high-resolution 3D dense point cloud. This happens during the fusion step, and we’ll discuss it in the next blog of this series.